Common problems

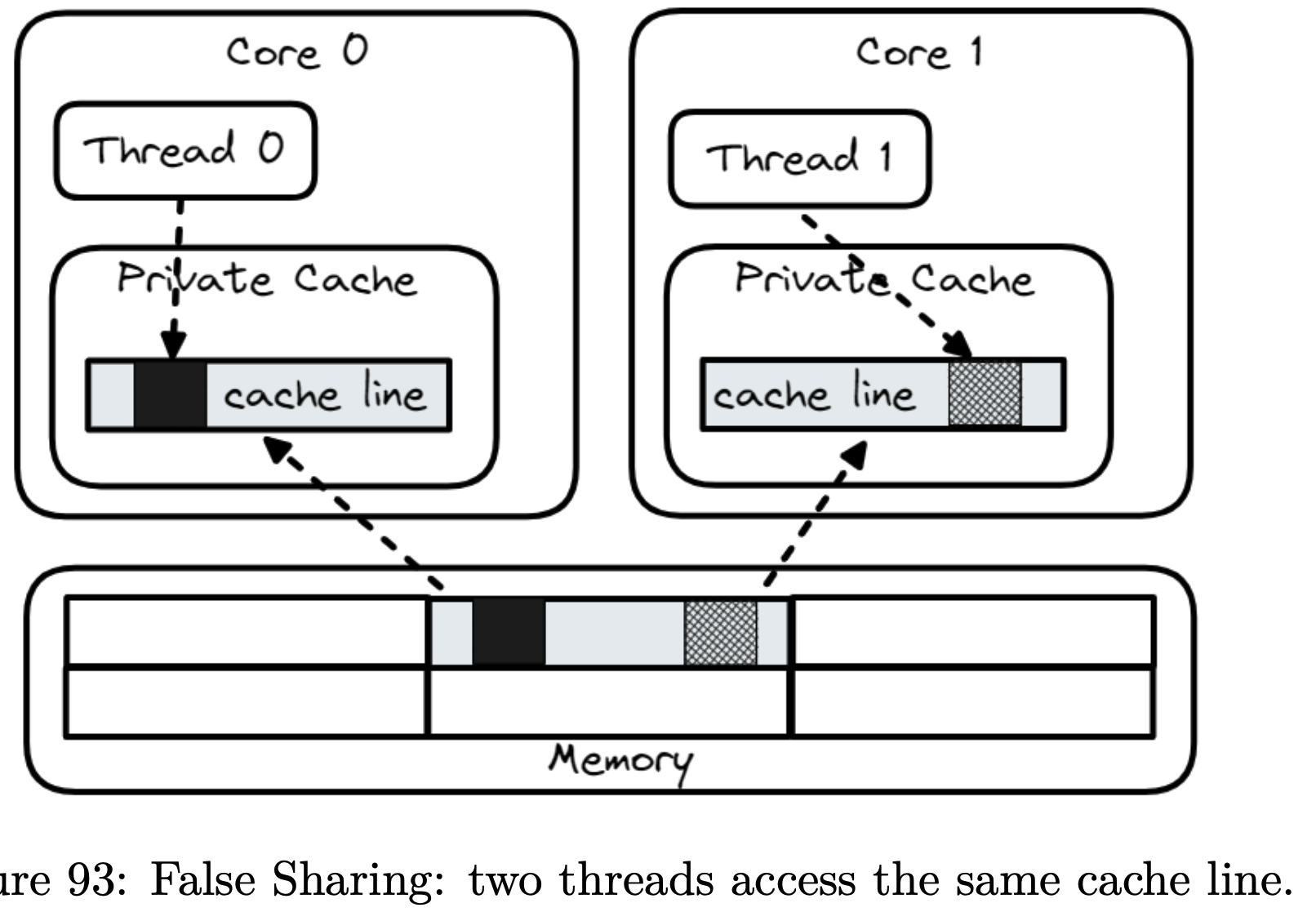

A common problem on multiprocessor architectures with per-core caches is false sharing. It occurs when threads running on different processors repeatedly write to distinct variables that happen to sit on the same cache line. Because cache coherence operates on whole lines, each write invalidates the copy of that line held in the other processors' caches, even though the threads never touch each other's data. The line bounces between caches, and the threads effectively make each other wait by inducing cache misses. See also: How and when to align to cache line size?

The result is high latency on memory accesses that would otherwise hit in the local cache.

Example

    struct S {
        int sumA; // sumA and sumB are likely to
        int sumB; // reside in the same cache line
    };

    S s;

    {
        // code executed by thread A
        for (int i = 0; i < N; i++)
            s.sumA += a[i];
    }

    {
        // code executed by thread B
        for (int i = 0; i < N; i++)
            s.sumB += b[i];
    }
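To make the "same cache line" comment concrete, here is a minimal sketch that checks whether two addresses fall into the same line. The helper `same_cache_line` and the constant `kCacheLine` are hypothetical names introduced for illustration, and the 64-byte line size is an assumption that holds on typical x86-64 hardware but not everywhere.

```cpp
#include <cstddef>
#include <cstdint>

// Assumed cache line size in bytes; typical for x86-64, not universal.
constexpr std::size_t kCacheLine = 64;

struct S {
    int sumA;
    int sumB;
};

// Hypothetical helper: returns true if both addresses fall into the same
// kCacheLine-sized, kCacheLine-aligned block of memory.
inline bool same_cache_line(const void* p, const void* q) {
    const auto line_p = reinterpret_cast<std::uintptr_t>(p) / kCacheLine;
    const auto line_q = reinterpret_cast<std::uintptr_t>(q) / kCacheLine;
    return line_p == line_q;
}
```

For an `S` object that starts on a line boundary, `same_cache_line(&s.sumA, &s.sumB)` is true. Without that alignment the two members could straddle a boundary, which is why the comment says "likely" rather than "always".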

Fix

    type atomicLimiter struct {
        state unsafe.Pointer
        //lint:ignore U1000 Padding is unused but it is crucial to maintain performance
        // of this rate limiter in case of collocation with other frequently accessed memory.
        padding [56]byte // cache line size - state pointer size = 64 - 8; created to avoid false sharing.

        perRequest time.Duration
        maxSlack   time.Duration
        clock      Clock
    }
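The same idea can be applied to the C++ struct `S` from the Example. The sketch below uses `alignas` instead of an explicit padding array, so the compiler inserts the padding. The names `PaddedS` and `kCacheLine` are hypothetical, and the 64-byte line size is an assumption carried over from the Go snippet above.

```cpp
#include <cstddef>

// Assumed cache line size in bytes; matches the Go snippet, not universal.
constexpr std::size_t kCacheLine = 64;

// Each counter starts on its own cache line, so writes to sumA no longer
// invalidate the line that holds sumB (and vice versa).
struct PaddedS {
    alignas(kCacheLine) int sumA = 0;
    alignas(kCacheLine) int sumB = 0;
};

// The layout is checked at compile time: the members are exactly one line
// apart, and the struct grows from 8 bytes to two full lines.
static_assert(offsetof(PaddedS, sumB) - offsetof(PaddedS, sumA) == kCacheLine,
              "sumA and sumB must be one cache line apart");
static_assert(sizeof(PaddedS) == 2 * kCacheLine,
              "PaddedS should occupy two cache lines");
```

The thread loops from the Example stay unchanged; only the declaration of `s` switches to `PaddedS`. The cost is memory: 128 bytes instead of 8. Where the standard library provides it, C++17's `std::hardware_destructive_interference_size` from `<new>` can replace the hard-coded 64.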